
Introduction to the Evolution of Operating Systems

Today, computer users interact directly with their computers. In the past, however, users could not communicate directly with computer hardware. At first, the computer simply carried out the instructions that users fed into it. Later, users prepared their instructions as jobs on an offline medium such as punch cards and handed them to a computer operator.

After that, users could interact with the operating system through a command-line interface (CLI). In contemporary operating systems, the graphical user interface (GUI) has made the user experience far more convenient.

What is the Evolution of Operating Systems?

Let’s first build a basic understanding of operating systems before studying their evolution.

An operating system is software that serves as an interface between users, application software, and hardware. Its primary goals are managing computer resources, security, file systems, and so on. In short, the operating system provides a platform on which application software and other system software carry out their functions.

Looking at operating system development as a whole, we can identify four distinct generations. Let’s briefly go over them in chronological order.


First Generation of Operating Systems

The first generation, from 1945 to 1955, coincided with the development of the earliest electronic computing systems. In this era the computer simply executed the instructions supplied by users or programmers on punch cards, paper tape, magnetic tape, and similar media. Because every step required human involvement, the process was extremely slow and error-prone.

At that time, computers effectively had no operating system; users loaded programs directly onto the hardware. As a result, first-generation systems suffered from slow performance and frequent errors. That was the first stage in the evolution of operating systems.

Second Generation of Operating Systems

The second generation, from 1955 to 1965, saw the development of the batch operating system. Users prepared their instructions (tasks or jobs) on an offline medium such as punch cards and submitted them to the computer operator. To speed up processing, jobs with similar requirements were pooled and executed as a group, or batch. Each job consisted of program code, input data, and control instructions.
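To make the structure of a job concrete, here is a minimal Python sketch that splits a deck of card images into its three parts. The `Job` class, the `parse_job` helper, and the convention that control cards begin with `$` (`$JOB`, `$RUN`, `$END`) are hypothetical, modelled loosely on early job control languages rather than on any specific system.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """One job: control instructions, program code, and input data."""
    name: str
    control: list[str] = field(default_factory=list)
    program: list[str] = field(default_factory=list)
    data: list[str] = field(default_factory=list)


def parse_job(cards: list[str]) -> Job:
    """Split a deck of card images into the three parts of a job.

    Hypothetical convention: control cards start with '$'; cards between
    '$RUN' and the next control card are input data; every other card is
    program code.
    """
    job = Job(name="")
    in_data = False
    for card in cards:
        if card.startswith("$"):
            job.control.append(card)
            if card.startswith("$JOB"):
                # "$JOB PAYROLL" -> job name "PAYROLL"
                parts = card.split(maxsplit=1)
                job.name = parts[1] if len(parts) > 1 else ""
            in_data = card == "$RUN"
        elif in_data:
            job.data.append(card)
        else:
            job.program.append(card)
    return job


deck = [
    "$JOB PAYROLL",
    "READ HOURS, RATE",
    "PAY = HOURS * RATE",
    "PRINT PAY",
    "$RUN",
    "40 12.50",
    "$END",
]
job = parse_job(deck)
print(job.name, len(job.control), len(job.program), len(job.data))
```

Running it prints `PAYROLL 3 3 1`: three control cards, three program cards, and one data card.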

The programmer’s or developer’s main responsibility was to write the job and hand it to the operator as a deck of punch cards. The operator then grouped programs with similar requirements into batches.

- Second-generation operating systems could not prioritize tasks, because jobs were scheduled only on the basis of their similarity.

- The processor sat idle while the operator was loading jobs, so it was not used to its full capacity.


Third Generation of Operating Systems

The third generation, from 1965 to 1980, was the era of multiprogramming operating systems. Third-generation systems could serve many users at once (multi-user). During this period, people communicated with the operating system through software known as a command-line interface. Computers thus became multi-user, multiprogramming machines.

Fourth Generation of Operating Systems

The fourth generation of operating systems has been in use since 1980. Before it, users could communicate with the operating system, but only through command-line interfaces, punch cards, magnetic tapes, and the like. Users had to type commands they had memorized, which was tedious. The arrival of the graphical user interface (GUI) ushered in the fourth generation and greatly improved the user experience.

The fourth generation, which also includes the era of distributed operating systems, may eventually give way to a new one. Today, operating systems run not only on computers but also on mobile devices, smartwatches, fitness bands, smart glasses, and VR headsets.

Feature-Based Evolution of Operating Systems

Now that we have seen how operating systems changed from generation to generation, let’s look at their evolution in terms of features, that is, how individual features developed over the years.

Serial Processing in the Evolution of Operating Systems

Until the 1950s, computers had no operating system, and users entered programs into the machine by hand. Because processing was serial (one job at a time on a single machine), execution was slow and errors were common. Programmers had to supply the entire program as a sequence of instructions on punched cards, which were fed through a card reader into the machine.

Running even a simple program involved a complicated procedure and a great deal of human involvement, so total execution time was long and wasteful. Other problems included the lack of user interaction and the fact that only one task could run at a time.


The serial operating system had several significant flaws:

- No interaction with the computer system.

- Very little memory.

- Programs took a long time to execute.

- Only one program could run at any given time.

- While one program was running, the user could not run another.

Batch Processing in the Evolution of Operating Systems

Today’s users interact directly with their computers, but in the past (1955–1965) users could not actively communicate with the computer system.

The batch operating system was the type of operating system used during this period. Users prepared their instructions (tasks or jobs) offline, for example on punch cards, and submitted them to the computer operator. To speed up processing, jobs with similar requirements were pooled and executed as a group.

The programmer’s or developer’s main responsibility was to create jobs and hand them to the operator as punch cards, and the operator grouped programs with similar requirements into batches. This two-step process and the operator’s involvement made batch processing slow. Card readers and tape drives were the most common input and output devices.

Scheduling similar jobs together meant that the jobs in a batch were completed more quickly. Because jobs were scheduled sequentially, the next job in the job spool started as soon as the previous one finished. The batch operating system was simple enough to stay resident in memory at all times, and its primary function was to pass control from one job to the next.
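Why pooling similar jobs saves time can be shown with a small calculation. This sketch assumes that each job first needs a setup step (for example, loading a particular compiler) costing a fixed 10 minutes, and that jobs sharing a requirement can share one setup when batched; the job list and timings are made up for illustration.

```python
from itertools import groupby

# Hypothetical jobs: (name, requirement, run_minutes).
# "requirement" stands for what must be set up first, e.g. which compiler.
JOBS = [
    ("A", "FORTRAN", 5),
    ("B", "COBOL", 3),
    ("C", "FORTRAN", 4),
    ("D", "COBOL", 2),
    ("E", "FORTRAN", 6),
]
SETUP_MINUTES = 10  # assumed cost of one setup (loading a compiler, etc.)


def serial_time(jobs):
    """Serial processing: every job pays for its own setup."""
    return sum(SETUP_MINUTES + run for _, _, run in jobs)


def batch_time(jobs):
    """Batch processing: group jobs by requirement, one setup per batch.

    Inside a batch the jobs still run one after another; control passes
    to the next job as soon as the previous one finishes.
    """
    ordered = sorted(jobs, key=lambda job: job[1])  # groupby needs sorted input
    total = 0
    for _, batch in groupby(ordered, key=lambda job: job[1]):
        total += SETUP_MINUTES + sum(run for _, _, run in batch)
    return total


print(serial_time(JOBS), batch_time(JOBS))
```

With these numbers it prints `70 40`: serial processing pays for five setups, batch processing for only two. The jobs are ordered by requirement alone, so, as with real batch systems, nothing in the scheme expresses which job is more urgent.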

The batch operating system had the following significant flaws:

- Because jobs were scheduled only by similarity, there was no way to assign them priorities.

- The CPU sat idle while the operator was loading jobs, so it was not used to its full capacity.

Multiprogramming in the Evolution of Operating Systems

A multiprogramming operating system uses a single processor but loads multiple tasks or processes into main memory at the same time and schedules them according to some policy. When the running program has to wait, for example for I/O, the CPU switches to another program instead of sitting idle. The operating system’s job scheduler is responsible for ordering these processes so that as many as possible complete in the shortest time. The primary goal of multiprogramming was therefore to keep several programs in progress at once and use the CPU and memory more effectively.
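The gain from multiprogramming can be illustrated with a toy simulation that compares CPU busy time with total elapsed time. It is a simplified model, not a description of any historical system: each job alternates CPU and I/O bursts, I/O for different jobs is assumed to overlap, and the CPU simply takes the lowest-numbered ready job and keeps it until that job starts I/O.

```python
# Each job alternates CPU and I/O bursts, starting and ending with CPU:
# [cpu, io, cpu, ...]. Times are abstract, positive integer units.
JOBS = [
    [2, 3, 2],
    [2, 3, 2],
]


def uniprogramming(jobs):
    """One job in memory at a time: the CPU idles during every I/O burst.

    Returns (elapsed_time, cpu_busy_time).
    """
    elapsed = sum(sum(bursts) for bursts in jobs)
    busy = sum(sum(bursts[0::2]) for bursts in jobs)  # even indices are CPU
    return elapsed, busy


def multiprogramming(jobs):
    """All jobs in memory; when the running job starts I/O, the CPU
    switches to another ready job. Simulated in unit time steps.

    Returns (elapsed_time, cpu_busy_time).
    """
    n = len(jobs)
    pos = [0] * n                          # index of each job's current burst
    left = [bursts[0] for bursts in jobs]  # time left in that burst

    def done(i):
        return pos[i] >= len(jobs[i])

    def on_cpu(i):
        return not done(i) and pos[i] % 2 == 0

    def on_io(i):
        return not done(i) and pos[i] % 2 == 1

    running = None
    elapsed = busy = 0
    while not all(done(i) for i in range(n)):
        # Dispatch: if the CPU is free, give it to a ready job (if any).
        if running is None or not on_cpu(running):
            ready = [i for i in range(n) if on_cpu(i)]
            running = ready[0] if ready else None
        # Every job doing I/O, plus the running job, advances one unit.
        active = [i for i in range(n) if on_io(i)]
        if running is not None:
            active.append(running)
            busy += 1
        for i in active:
            left[i] -= 1
            if left[i] == 0:               # burst finished: move to the next
                pos[i] += 1
                if not done(i):
                    left[i] = jobs[i][pos[i]]
        elapsed += 1
    return elapsed, busy


print(uniprogramming(JOBS))    # (14, 8)
print(multiprogramming(JOBS))  # (9, 8)
```

The CPU does 8 units of work in both cases, but multiprogramming finishes in 9 units instead of 14 because the CPU works on one job while the other waits for I/O.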

A multiprogramming operating system has the following advantages:

- Many processes can be loaded into main memory at once.

- It can use both main memory and secondary storage.

- It provides the basis for the time-sharing mechanism.

Parallel Processing Systems in the Evolution of Operating Systems

A parallel processing system has multiple processors, so multiple tasks or processes can be loaded into main memory at once and run simultaneously on different processors. In a parallel processing operating system, a process is broken down into several smaller units (threads), which are distributed among the system’s processors for execution.

As a result, parallel processing completes the task faster. This system was referred to as a parallel processing operating system because numerous processors execute the task in parallel.
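Breaking one task into smaller pieces that run at the same time can be sketched with Python’s standard `concurrent.futures` module. The task (summing squares) and the chunking scheme are illustrative assumptions. One caveat: in CPython, threads do not execute Python code on several processors at once because of the global interpreter lock, so this version shows the decomposition rather than a real speedup; `ProcessPoolExecutor` offers the same interface with separate processes and can use multiple processors for CPU-bound work (typically behind an `if __name__ == "__main__":` guard).

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """The work done by one piece of the overall task."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(numbers, workers=4):
    """Split one task into up to `workers` pieces, run the pieces
    concurrently, and combine the partial results."""
    size = max(1, -(-len(numbers) // workers))  # ceiling division, at least 1
    chunks = [numbers[i:i + size] for i in range(0, len(numbers), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


print(parallel_sum_of_squares(list(range(1, 101))))  # 338350
```

The final `sum` is the combining step: each worker returns a partial result, and the pieces are merged once all of them finish.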


Distributed Systems in the Evolution of Operating Systems

A distributed operating system connects two or more nodes. It is also called a loosely coupled operating system because, although the nodes are connected, their processors share neither a clock nor memory. The nodes communicate with one another over telephone lines, high-speed buses, and similar links.

The distributed operating system has the following advantages:

- If one computer system (node) fails, communication among the other nodes is not affected.

- Pooling the resources of many nodes increases processing speed and makes the system more accessible and reliable.
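The reliability argument can be illustrated with a toy simulation in which each node keeps its own private memory and is reached only through messages. The `Node` class, the round-robin task assignment, and the retry-on-next-node policy are hypothetical simplifications; a real distributed operating system would detect failures with timeouts and would also have to cope with the lack of a shared clock, which this sketch does not model.

```python
class Node:
    """A node with its own private memory; it shares nothing with the
    other nodes and is reached only through messages."""

    def __init__(self, name):
        self.name = name
        self.alive = True
        self.memory = {}          # private to this node

    def handle(self, message):
        """Process one (task_id, value) message; a failed node cannot reply."""
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        task_id, value = message
        self.memory[task_id] = value * value   # the "work": square the input
        return task_id, self.memory[task_id]


def run_distributed(tasks, nodes):
    """Send each task to a node in turn; if that node has failed,
    retry the task on the next node."""
    results = {}
    for i, task in enumerate(tasks):
        for attempt in range(len(nodes)):
            node = nodes[(i + attempt) % len(nodes)]
            try:
                task_id, value = node.handle(task)
            except ConnectionError:
                continue                       # try another node
            results[task_id] = value
            break
        else:
            raise RuntimeError("no live nodes left")
    return results


nodes = [Node("n1"), Node("n2"), Node("n3")]
nodes[1].alive = False                 # simulate a failed node
tasks = [(t, t) for t in range(6)]     # (task_id, input)
results = run_distributed(tasks, nodes)
print(results)
```

Even with node `n2` down, every task completes: the tasks that would have gone to `n2` are retried on `n3`.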

Time-Sharing Systems in the Evolution of Operating Systems

A time-sharing operating system loads several tasks or processes into main memory at once and lets multiple users share the machine, so it was a natural progression from the multiprogramming operating system. The name comes from the way the operating system divides CPU time among processes, giving each one an equal share (a time slice) in turn.

The primary goal of time-sharing was to shorten response time. CPU utilization also improved over the multiprogramming operating system, because the CPU rotated among many tasks, giving each the same amount of time.
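Equal time slices are commonly implemented with round-robin scheduling, and a minimal simulation makes the effect on response time visible. The sketch assumes all processes arrive at time 0, the quantum is a positive integer, and switching between processes costs nothing; the process names and burst lengths are hypothetical.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Simulate time-sharing: each process gets at most `quantum` units
    of CPU time per turn, then goes to the back of the queue.

    bursts: dict mapping process name -> total CPU time it needs.
    Returns (timeline, completion): timeline lists (process, start, end)
    slices; completion maps each process to the time it finished.
    """
    queue = deque(bursts.items())
    clock = 0
    timeline, completion = [], {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))   # not finished: requeue
        else:
            completion[name] = clock
    return timeline, completion


timeline, completion = round_robin({"P1": 5, "P2": 3, "P3": 1}, quantum=2)
for name, start, end in timeline:
    print(f"{name}: {start}-{end}")
print(completion)
```

With a quantum of 2, the short process P3 finishes at time 5. Under first-come, first-served scheduling it would wait behind P1 and P2 and finish at time 9, which is why time-sharing feels more responsive to interactive users.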


History of Operating Systems

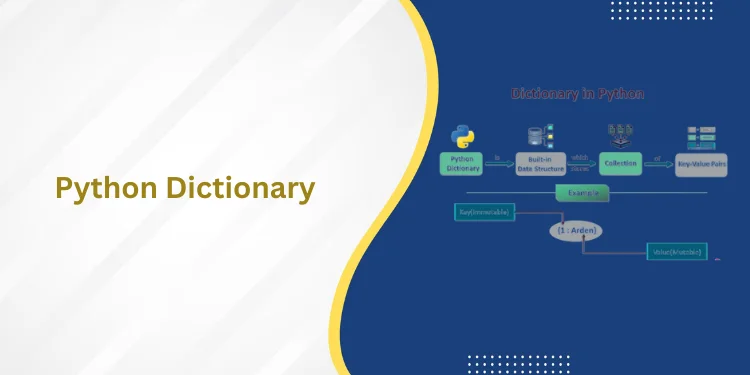

The operating system is system software that acts as a conduit between the computer and the user. It provides an environment in which the user can run programs and interact with other programs or applications in a convenient, structured way.

An operating system also governs how computer hardware, software resources, and applications are used. It helps manage hardware and software resources such as input/output, memory, and peripheral devices like disk drives and printers. Popular operating systems include Linux, Windows, macOS, VMS, and OS/400.

Conclusion

At first, the computer simply followed the commands users gave it. Later, users prepared their instructions as jobs on punch cards and submitted them to a computer operator. After that, users could interact with operating systems through command-line interfaces, and the emergence of graphical user interfaces further improved the user experience. Along the way, multiprogramming operating systems made it possible to load multiple processes into main memory at once with a single processor.

Frequently Asked Questions

What is the first step in the evolution of operating systems?

Serial Processing

The first stage in the evolution of operating systems came between 1940 and 1950, when programmers interacted directly with the hardware without any operating system. The main problems were scheduling and setup time. Users booked blocks of machine time in advance, so computer time was wasted whenever a job finished early or had to be abandoned.

Setup time covered loading the compiler and the source program, saving the compiled program, linking, and buffering. If an error occurred partway through, the whole process had to be restarted.

The program counter determines which instruction executes first and which executes next. Punch cards were the main medium: each job was prepared and stored on cards, which were then fed into the system, and the instructions were carried out one at a time. The main drawback was that users could not supply data during execution, because they did not interact with the system while it was running.
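The role of the program counter can be sketched with a tiny interpreter. The instruction set (`LOAD`, `ADD`, `SUB`, `JNZ`, `PRINT`, `HALT`) is a made-up toy, not any real machine’s. Each loop iteration fetches the instruction the counter points at, advances the counter, and executes the instruction; a jump simply overwrites the counter.

```python
def run(program):
    """Execute instructions one at a time, as directed by a program counter.

    program: list of (opcode, operand) pairs in a hypothetical toy
    instruction set.
    """
    acc = 0          # accumulator register
    pc = 0           # program counter: index of the next instruction
    output = []
    while True:
        op, arg = program[pc]    # fetch the instruction the PC points at
        pc += 1                  # by default, the next instruction follows
        if op == "LOAD":
            acc = arg
        elif op == "ADD":
            acc += arg
        elif op == "SUB":
            acc -= arg
        elif op == "JNZ":        # jump: overwrite the PC if acc != 0
            if acc != 0:
                pc = arg
        elif op == "PRINT":
            output.append(acc)
        elif op == "HALT":
            return output
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Count down from 3 to 1, printing each value.
program = [
    ("LOAD", 3),      # address 0
    ("PRINT", None),  # address 1
    ("SUB", 1),       # address 2
    ("JNZ", 1),       # address 3: back to PRINT while acc != 0
    ("HALT", None),   # address 4
]
print(run(program))
```

It prints `[3, 2, 1]`. The program has no instruction for reading input while it runs: as with a punched-card job, everything it needs must be in the deck before execution starts.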

Why did operating systems need to evolve?

Operating systems have evolved from slow, expensive systems to today’s technology, which offers exponentially growing computational power at comparatively low cost. Initially, program code to control computer operations and execute business logic was entered into computers by hand.

This style of computing caused problems with program scheduling and setup time. As more users requested more computer time and resources, computer scientists realized they needed a way to improve convenience, efficiency, and capacity for growth (Stallings, 2009, p. 51). They therefore developed an operating system (OS) that handled jobs in batches.

Later, time-sharing and multitasking were developed to manage many tasks at once and to allow user interaction, boosting productivity. Multitasking, however, made it harder to manage the I/O operations required by several jobs.

What is the history and evolution of operating systems?

The first operating systems were created in the 1950s, when computers could run only one program at a time. In the following decades, computers came with a growing collection of software, commonly referred to as libraries, which together formed the foundation of today’s operating systems.

The first version of the Unix operating system was created in the late 1960s. In the early 1970s it was rewritten in the C programming language, which made it easy to adapt to new machines, and because it was initially made available at little or no cost, it quickly gained widespread popularity.

Many contemporary operating systems, including Apple’s macOS (formerly OS X) and the various Linux distributions, derive from or are modeled on Unix.

Which was the first operating system?

The IBM 704 ran GM-NAA I/O, an operating system created in 1956 by the Research division of General Motors, which is often cited as the first operating system. Most of the other early operating systems for IBM mainframes were also created by customers.

Each vendor or customer produced one or more operating systems specific to its own mainframe, which made the early operating system market highly fragmented. Each operating system, even those from the same vendor, could have radically different models for commands, running processes, and tools such as debugging aids.

Typically, every time a manufacturer released a new computer, it came with a new operating system, and most applications had to be manually adjusted, recompiled, and retested.

What is the OS’s primary purpose?

The OS acts as a conduit between the user and the computer hardware. One of its primary duties is managing files and directories: the operating system handles all file operations, including opening, closing, and deleting files.
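From a program’s point of view, file management means asking the operating system to open, read, write, close, and delete files. Python’s `os` module exposes these requests as thin wrappers around the underlying system calls (on POSIX systems, `open`, `write`, `read`, `close`, and `unlink`). The file name below is hypothetical, and the example works in a temporary directory so it touches nothing else.

```python
import os
import tempfile

# Work in a fresh scratch directory so no existing files are touched.
directory = tempfile.mkdtemp()
path = os.path.join(directory, "notes.txt")  # hypothetical file name
binary = getattr(os, "O_BINARY", 0)          # exists on Windows only; 0 elsewhere

# Ask the OS to create and open the file; it returns a file descriptor.
fd = os.open(path, os.O_CREAT | os.O_WRONLY | os.O_TRUNC | binary, 0o644)
os.write(fd, b"hello, operating system\n")
os.close(fd)                                 # release the descriptor

# Open the file again, this time for reading.
fd = os.open(path, os.O_RDONLY | binary)
data = os.read(fd, 1024)
os.close(fd)
print(data.decode(), end="")

# Ask the OS to delete the file and the now-empty directory.
os.remove(path)
os.rmdir(directory)
print(os.path.exists(path))                  # False
```

The small integer returned by `os.open` is a file descriptor, the handle the operating system uses to keep track of each open file.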

An operating system is a piece of software that manages programmes and acts as a communication channel (interface) between the user and computer hardware.

An operating system’s main responsibility is to allocate services and resources such as hardware, memory, processors, and data. To manage these resources it has several components, including a traffic controller, a scheduler, a memory management module, a file system, and I/O programs.